Detection for English Alphabets

The Sign Language Recognition Model is a machine learning system that identifies hand gestures representing letters of the American Sign Language (ASL) alphabet. Using deep learning, it translates images of signs into readable text to make communication more accessible.

The model takes an image of an ASL gesture and outputs the corresponding uppercase letter. The image passes through a deep learning architecture (such as MobileNetV2) that extracts features and classifies the gesture as one of 26 letters, returning both the predicted letter and a confidence score.
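The final step, turning the classifier's 26 output scores into a letter and a confidence score, can be sketched as follows. This is a minimal illustration, not the model's actual code: it assumes the network emits one raw score (logit) per letter in alphabetical order and that confidence is the softmax probability of the top class. The names `softmax`, `decode_prediction`, and `example_logits` are hypothetical.

```python
import math
import string

# Assumption: class indices 0..25 map to "A".."Z" in alphabetical order.
LETTERS = string.ascii_uppercase


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_prediction(logits):
    """Return (letter, confidence) for a 26-element score vector."""
    if len(logits) != len(LETTERS):
        raise ValueError(f"expected {len(LETTERS)} scores, got {len(logits)}")
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LETTERS[best], probs[best]


# Hypothetical scores: class index 2 ("C") has the largest logit.
example_logits = [0.0] * 26
example_logits[2] = 5.0

letter, confidence = decode_prediction(example_logits)
print(f"Predicted Sign: {letter}")
print(f"Confidence: {confidence:.1%}")
```

In a real pipeline the score vector would come from the network's final layer rather than being written by hand, and the label order would need to match the order used during training.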

Static Image Sign Language Recognition

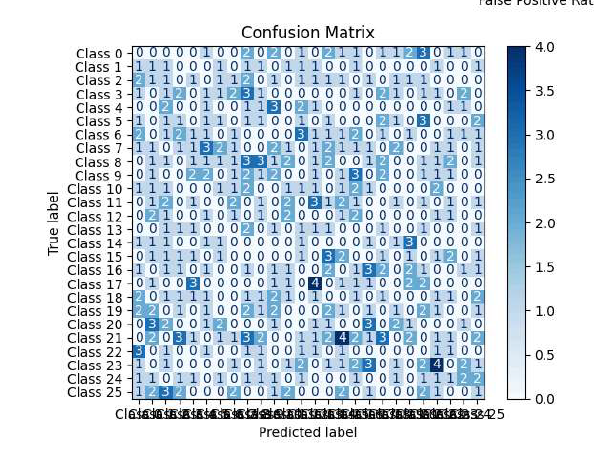

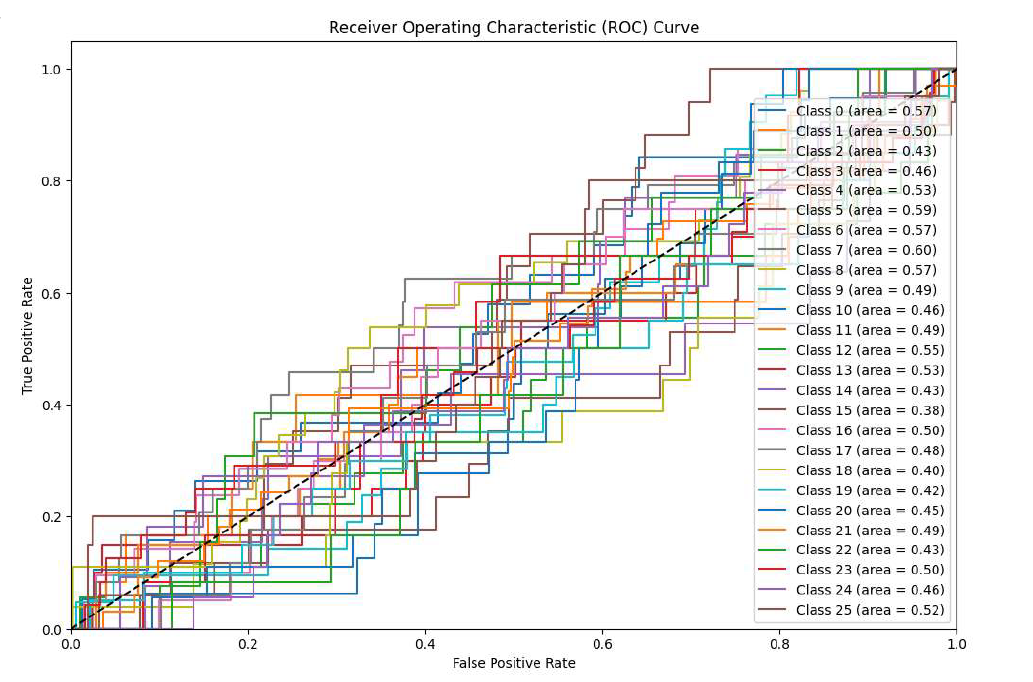

Graphs & Metrics

Accuracy Score: 83%
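
The page does not say how the accuracy score was computed. If it is plain top-1 accuracy (the fraction of test images whose predicted letter matches the true letter), the calculation would look like the sketch below. The function name `accuracy` and the sample labels are hypothetical and are not the model's evaluation data.

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of predictions that exactly match the true labels."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


# Hypothetical labels for four images; the third prediction is wrong.
true_labels = ["A", "B", "C", "D"]
predicted_labels = ["A", "B", "X", "D"]

score = accuracy(true_labels, predicted_labels)
print(f"Accuracy Score: {score:.0%}")
```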